Rally 2.3.0¶

You want to benchmark Elasticsearch? Then Rally is for you. It can help you with the following tasks:

- Setup and teardown of an Elasticsearch cluster for benchmarking

- Management of benchmark data and specifications even across Elasticsearch versions

- Running benchmarks and recording results

- Finding performance problems by attaching so-called telemetry devices

- Comparing performance results

We have also put considerable effort in Rally to ensure that benchmarking data are reproducible.

Getting Help or Contributing to Rally¶

- Use our Discuss forum to provide feedback or ask questions about Rally.

- See our contribution guide on guidelines for contributors.

Source Code¶

Rally’s source code is available on Github. You can also check the changelog and the roadmap there.

Quickstart¶

Rally is developed for Unix and is actively tested on Linux and macOS. Rally supports benchmarking Elasticsearch clusters running on Windows but Rally itself needs to be installed on machines running Unix.

Install¶

Install Python 3.8+ including pip3, git 1.9+ and an appropriate JDK to run Elasticsearch Be sure that JAVA_HOME points to that JDK. Then run the following command, optionally prefixed by sudo if necessary:

pip3 install esrally

If you have any trouble or need more detailed instructions, look in the detailed installation guide.

Run your first race¶

Now we’re ready to run our first race:

esrally race --distribution-version=6.5.3 --track=geonames

This will download Elasticsearch 6.5.3 and run the geonames track against it. After the race, a summary report is written to the command line::

------------------------------------------------------

_______ __ _____

/ ____(_)___ ____ _/ / / ___/_________ ________

/ /_ / / __ \/ __ `/ / \__ \/ ___/ __ \/ ___/ _ \

/ __/ / / / / / /_/ / / ___/ / /__/ /_/ / / / __/

/_/ /_/_/ /_/\__,_/_/ /____/\___/\____/_/ \___/

------------------------------------------------------

| Lap | Metric | Task | Value | Unit |

|------:|----------------------------------------------------------------:|-----------------------:|----------:|--------:|

| All | Cumulative indexing time of primary shards | | 54.5878 | min |

| All | Min cumulative indexing time across primary shards | | 10.7519 | min |

| All | Median cumulative indexing time across primary shards | | 10.9219 | min |

| All | Max cumulative indexing time across primary shards | | 11.1754 | min |

| All | Cumulative indexing throttle time of primary shards | | 0 | min |

| All | Min cumulative indexing throttle time across primary shards | | 0 | min |

| All | Median cumulative indexing throttle time across primary shards | | 0 | min |

| All | Max cumulative indexing throttle time across primary shards | | 0 | min |

| All | Cumulative merge time of primary shards | | 20.4128 | min |

| All | Cumulative merge count of primary shards | | 136 | |

| All | Min cumulative merge time across primary shards | | 3.82548 | min |

| All | Median cumulative merge time across primary shards | | 4.1088 | min |

| All | Max cumulative merge time across primary shards | | 4.38148 | min |

| All | Cumulative merge throttle time of primary shards | | 1.17975 | min |

| All | Min cumulative merge throttle time across primary shards | | 0.1169 | min |

| All | Median cumulative merge throttle time across primary shards | | 0.26585 | min |

| All | Max cumulative merge throttle time across primary shards | | 0.291033 | min |

| All | Cumulative refresh time of primary shards | | 7.0317 | min |

| All | Cumulative refresh count of primary shards | | 420 | |

| All | Min cumulative refresh time across primary shards | | 1.37088 | min |

| All | Median cumulative refresh time across primary shards | | 1.4076 | min |

| All | Max cumulative refresh time across primary shards | | 1.43343 | min |

| All | Cumulative flush time of primary shards | | 0.599417 | min |

| All | Cumulative flush count of primary shards | | 10 | |

| All | Min cumulative flush time across primary shards | | 0.0946333 | min |

| All | Median cumulative flush time across primary shards | | 0.118767 | min |

| All | Max cumulative flush time across primary shards | | 0.14145 | min |

| All | Median CPU usage | | 284.4 | % |

| All | Total Young Gen GC time | | 12.868 | s |

| All | Total Young Gen GC count | | 17 | |

| All | Total Old Gen GC time | | 3.803 | s |

| All | Total Old Gen GC count | | 2 | |

| All | Store size | | 3.17241 | GB |

| All | Translog size | | 2.62736 | GB |

| All | Index size | | 5.79977 | GB |

| All | Total written | | 22.8536 | GB |

| All | Heap used for segments | | 18.8885 | MB |

| All | Heap used for doc values | | 0.0322647 | MB |

| All | Heap used for terms | | 17.7184 | MB |

| All | Heap used for norms | | 0.0723877 | MB |

| All | Heap used for points | | 0.277171 | MB |

| All | Heap used for stored fields | | 0.788307 | MB |

| All | Segment count | | 94 | |

| All | Min Throughput | index-append | 38089.5 | docs/s |

| All | Mean Throughput | index-append | 38325.2 | docs/s |

| All | Median Throughput | index-append | 38613.9 | docs/s |

| All | Max Throughput | index-append | 40693.3 | docs/s |

| All | 50th percentile latency | index-append | 803.417 | ms |

| All | 90th percentile latency | index-append | 1913.7 | ms |

| All | 99th percentile latency | index-append | 3591.23 | ms |

| All | 99.9th percentile latency | index-append | 6176.23 | ms |

| All | 100th percentile latency | index-append | 6642.97 | ms |

| All | 50th percentile service time | index-append | 803.417 | ms |

| All | 90th percentile service time | index-append | 1913.7 | ms |

| All | 99th percentile service time | index-append | 3591.23 | ms |

| All | 99.9th percentile service time | index-append | 6176.23 | ms |

| All | 100th percentile service time | index-append | 6642.97 | ms |

| All | error rate | index-append | 0 | % |

| All | ... | ... | ... | ... |

| All | ... | ... | ... | ... |

| All | Min Throughput | large_prohibited_terms | 2 | ops/s |

| All | Mean Throughput | large_prohibited_terms | 2 | ops/s |

| All | Median Throughput | large_prohibited_terms | 2 | ops/s |

| All | Max Throughput | large_prohibited_terms | 2 | ops/s |

| All | 50th percentile latency | large_prohibited_terms | 344.429 | ms |

| All | 90th percentile latency | large_prohibited_terms | 353.187 | ms |

| All | 99th percentile latency | large_prohibited_terms | 377.22 | ms |

| All | 100th percentile latency | large_prohibited_terms | 392.918 | ms |

| All | 50th percentile service time | large_prohibited_terms | 341.177 | ms |

| All | 90th percentile service time | large_prohibited_terms | 349.979 | ms |

| All | 99th percentile service time | large_prohibited_terms | 374.958 | ms |

| All | 100th percentile service time | large_prohibited_terms | 388.62 | ms |

| All | error rate | large_prohibited_terms | 0 | % |

----------------------------------

[INFO] SUCCESS (took 1862 seconds)

----------------------------------

Next steps¶

Now you can check how to run benchmarks, get a better understanding how to interpret the numbers in the summary report, configure Rally to better suit your needs or start to create your own tracks. Be sure to check also some tips and tricks to help you understand how to solve specific problems in Rally.

Also run esrally --help to see what options are available and keep the command line reference handy for more detailed explanations of each option.

Installation¶

This is the detailed installation guide for Rally. If you are in a hurry you can check the quickstart guide.

Hardware Requirements¶

Use an SSD on the load generator machine. If you run bulk-indexing benchmarks, Rally will read one or more data files from disk. Usually, you will configure multiple clients and each client reads a portion of the data file. To the disk this appears as a random access pattern where spinning disks perform poorly. To avoid an accidental bottleneck on client-side you should therefore use an SSD on each load generator machine.

Prerequisites¶

Rally does not support Windows and is only actively tested on macOS and Linux. Install the following packages first.

Python¶

- Python 3.8 or better available as

python3on the path. Verify with:python3 --version. - Python3 header files (included in the Python3 development package).

pip3available on the path. Verify withpip3 --version.

We recommend to use pyenv to manage installation of Python. For details refer to their installation instructions and ensure that all of pyenv’s prerequisites are installed.

Once pyenv is installed, install a compatible Python version:

# Install Python

pyenv install 3.8.10

# select that version for the current user

# see https://github.com/pyenv/pyenv/blob/master/COMMANDS.md#pyenv-global for details

pyenv global 3.8.10

# Upgrade pip

python3 -m pip install --user --upgrade pip

git¶

Git is not required if all of the following conditions are met:

- You are using Rally only as a load generator (

--pipeline=benchmark-only) or you are referring to Elasticsearch configurations with--team-path. - You create your own tracks and refer to them with

--track-path.

In all other cases, Rally requires git 1.9 or better. Verify with git --version.

Debian / Ubuntu

sudo apt-get install git

Red Hat / CentOS / Amazon Linux

sudo yum install git

Note

If you use RHEL, install a recent version of git via the Red Hat Software Collections.

macOS

git is already installed on macOS.

pbzip2¶

It is strongly recommended to install pbzip2 to speed up decompressing the corpora of Rally standard tracks.

If you have created custom tracks using corpora compressed with gzip instead of bzip2, it’s also advisable to install pigz to speed up the process.

Debian / Ubuntu

sudo apt-get install pbzip2

Red Hat / CentOS / Amazon Linux

pbzip is available via the EPEL repository.

sudo yum install pbzip2

macOS

Install via Homebrew:

brew install pbzip2

JDK¶

A JDK is required on all machines where you want to launch Elasticsearch. If you use Rally just as a load generator to benchmark remote clusters, no JDK is required. For details on how to install a JDK check your operating system’s documentation pages.

To find the JDK, Rally expects the environment variable JAVA_HOME to be set on all targeted machines. To have more specific control, for example when you want to benchmark across a wide range of Elasticsearch releases, you can also set JAVAx_HOME where x is the major version of a JDK (e.g. JAVA8_HOME would point to a JDK 8 installation). Rally will then choose the highest supported JDK per version of Elasticsearch that is available.

Note

If you have Rally download, install and benchmark a local copy of Elasticsearch (i.e., the default Rally behavior) be sure to configure the Operating System (OS) of your Rally server with the recommended kernel settings

Optional dependencies¶

S3 support is optional and can be installed using the s3 extra. If you need S3 support, install esrally[s3] instead of just esrally, but other than that follow the instructions below.

Installing Rally¶

- Ensure

~/.local/binis in your$PATH. - Ensure pip is the latest version:

python3 -m pip install --user --upgrade pip - Install Rally:

python3 -m pip install --user esrally.

Virtual environment Install¶

You can also use virtualenv to install Rally into an isolated Python environment without sudo.

Set up a new virtualenv environment in a directory with

python3 -m venv .venvActivate the environment with

source /path/to/virtualenv/.venv/bin/activate- Ensure pip is the latest version:

python3 -m pip install --upgrade pip Important

Omitting this step might cause the next step (Rally installation) to fail due to broken dependencies. The pip version must be at minimum

20.3.

- Ensure pip is the latest version:

Install Rally with

python3 -m pip install esrally

Whenever you want to use Rally, run the activation script (step 2 above) first. When you are done, simply execute deactivate in the shell to exit the virtual environment.

Docker¶

Docker images of Rally can be found in Docker Hub.

Please refer to Running Rally with Docker for detailed instructions.

Offline Install¶

If you are in a corporate environment using Linux servers that do not have any access to the Internet, you can use Rally’s offline installation package. Follow these steps to install Rally:

- Install all prerequisites as documented above.

- Download the offline installation package for the latest release and copy it to the target machine(s).

- Decompress the installation package with

tar -xzf esrally-dist-linux-*.tar.gz. - Run the install script with

sudo ./esrally-dist-linux-*/install.sh.

Next Steps¶

On the first invocation Rally creates a default configuration file which you can customize. Follow the configuration help page for more guidance.

Running Rally with Docker¶

Rally is available as a Docker image.

Limitations¶

The following Rally functionality isn’t supported when using the Docker image:

- Distributing the load test driver to apply load from multiple machines.

- Using other pipelines apart from

benchmark-only.

Quickstart¶

You can test the Rally Docker image by first issuing a simple command to list the available tracks:

$ docker run elastic/rally list tracks

____ ____

/ __ \____ _/ / /_ __

/ /_/ / __ `/ / / / / /

/ _, _/ /_/ / / / /_/ /

/_/ |_|\__,_/_/_/\__, /

/____/

Available tracks:

Name Description Documents Compressed Size Uncompressed Size Default Challenge All Challenges

------------- --------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- ----------- ----------------- ------------------- ----------------------- ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

eql EQL benchmarks based on endgame index of SIEM demo cluster 60,782,211 4.5 GB 109.2 GB default default

eventdata This benchmark indexes HTTP access logs generated based sample logs from the elastic.co website using the generator available in https://github.com/elastic/rally-eventdata-track 20,000,000 756.0 MB 15.3 GB append-no-conflicts append-no-conflicts,transform

geonames POIs from Geonames 11,396,503 252.9 MB 3.3 GB append-no-conflicts append-no-conflicts,append-no-conflicts-index-only,append-sorted-no-conflicts,append-fast-with-conflicts,significant-text

geopoint Point coordinates from PlanetOSM 60,844,404 482.1 MB 2.3 GB append-no-conflicts append-no-conflicts,append-no-conflicts-index-only,append-fast-with-conflicts

geopointshape Point coordinates from PlanetOSM indexed as geoshapes 60,844,404 470.8 MB 2.6 GB append-no-conflicts append-no-conflicts,append-no-conflicts-index-only,append-fast-with-conflicts

geoshape Shapes from PlanetOSM 60,523,283 13.4 GB 45.4 GB append-no-conflicts append-no-conflicts

http_logs HTTP server log data 247,249,096 1.2 GB 31.1 GB append-no-conflicts append-no-conflicts,runtime-fields,append-no-conflicts-index-only,append-sorted-no-conflicts,append-index-only-with-ingest-pipeline,update,append-no-conflicts-index-reindex-only

metricbeat Metricbeat data 1,079,600 87.7 MB 1.2 GB append-no-conflicts append-no-conflicts

nested StackOverflow Q&A stored as nested docs 11,203,029 663.3 MB 3.4 GB nested-search-challenge nested-search-challenge,index-only

noaa Global daily weather measurements from NOAA 33,659,481 949.4 MB 9.0 GB append-no-conflicts append-no-conflicts,append-no-conflicts-index-only,top_metrics,aggs

nyc_taxis Taxi rides in New York in 2015 165,346,692 4.5 GB 74.3 GB append-no-conflicts append-no-conflicts,append-no-conflicts-index-only,append-sorted-no-conflicts-index-only,update,append-ml,date-histogram,indexing-querying

percolator Percolator benchmark based on AOL queries 2,000,000 121.1 kB 104.9 MB append-no-conflicts append-no-conflicts

pmc Full text benchmark with academic papers from PMC 574,199 5.5 GB 21.7 GB append-no-conflicts append-no-conflicts,append-no-conflicts-index-only,append-sorted-no-conflicts,append-fast-with-conflicts,indexing-querying

so Indexing benchmark using up to questions and answers from StackOverflow 36,062,278 8.9 GB 33.1 GB append-no-conflicts append-no-conflicts

-------------------------------

[INFO] SUCCESS (took 3 seconds)

-------------------------------

As a next step, we assume that Elasticsearch is running on es01:9200 and is accessible from the host where you are running the Rally Docker image.

Run the nyc_taxis track in test-mode using:

$ docker run elastic/rally race --track=nyc_taxis --test-mode --pipeline=benchmark-only --target-hosts=es01:9200

Note

We didn’t need to explicitly specify esrally as we’d normally do in a normal CLI invocation; the entrypoint in the Docker image does this automatically.

Now you are able to use all regular Rally commands, bearing in mind the aforementioned limitations.

Configuration¶

The Docker image ships with a default configuration file under /rally/.rally/rally.ini.

To customize Rally you can create your own rally.ini and bind mount it using:

docker run -v /home/<myuser>/custom_rally.ini:/rally/.rally/rally.ini elastic/rally ...

Persistence¶

It is highly recommended to use a local bind mount (or a named volume) for the directory /rally/.rally in the container.

This will ensure you have persistence across invocations and any tracks downloaded and extracted can be reused, reducing the startup time.

You need to ensure the UID is 1000 (or GID is 0 especially in OpenShift) so that Rally can write to the bind-mounted directory.

If your local bind mount doesn’t contain a rally.ini the container will create one for you during the first run.

Example:

mkdir myrally

sudo chgrp 0 myrally

# First run will also generate the rally.ini

docker run --rm -v $PWD/myrally:/rally/.rally elastic/rally race --track=nyc_taxis --test-mode --pipeline=benchmark-only --target-hosts=es01:9200

...

# inspect results

$ tree myrally/benchmarks/races/

myrally/benchmarks/races/

└── 1d81930a-4ebe-4640-a09b-3055174bce43

└── race.json

1 directory, 1 file

In case you forgot to bind mount a directory, the Rally Docker image will create an anonymous volume for /rally/.rally to ensure logs and results get persisted even after the container has terminated.

For example, after executing our earlier quickstart example docker run elastic/rally race --track=nyc_taxis --test-mode --pipeline=benchmark-only --target-hosts=es01:9200, docker volume ls shows a volume:

$ docker volume ls

DRIVER VOLUME NAME

local 96256462c3a1f61120443e6d69d9cb0091b28a02234318bdabc52b6801972199

To further examine the contents we can bind mount it from another image e.g.:

$ docker run --rm -i -v=96256462c3a1f61120443e6d69d9cb0091b28a02234318bdabc52b6801972199:/rallyvolume -ti python:3.8.12-slim-bullseye /bin/bash

root@9a7dd7b3d8df:/# cd /rallyvolume/

root@9a7dd7b3d8df:/rallyvolume# ls

root@9a7dd7b3d8df:/rallyvolume/.rally# ls

benchmarks logging.json logs rally.ini

# head -4 benchmarks/races/1d81930a-4ebe-4640-a09b-3055174bce43/race.json

{

"rally-version": "2.3.0",

"environment": "local",

"race-id": "1d81930a-4ebe-4640-a09b-3055174bce43",

Specifics about the image¶

Rally runs as user 1000 and its files are installed with uid:gid 1000:0 (to support OpenShift arbitrary user IDs).

Extending the Docker image¶

You can also create your own customized Docker image on top of the existing one. The example below shows how to get started:

FROM elastic/rally:2.3.0 COPY --chown=1000:0 rally.ini /rally/.rally/

You can then build and test the image with:

docker build --tag=custom-rally .

docker run -ti custom-rally list tracks

Run a Benchmark: Races¶

Definition¶

A “race” in Rally is the execution of a benchmarking experiment. You can choose different benchmarking scenarios (called tracks) for your benchmarks.

List Tracks¶

Start by finding out which tracks are available:

esrally list tracks

This will show the following list:

Name Description Documents Compressed Size Uncompressed Size Default Challenge All Challenges

------------- --------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- ----------- ----------------- ------------------- ----------------------- ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

eql EQL benchmarks based on endgame index of SIEM demo cluster 60,782,211 4.5 GB 109.2 GB default default

eventdata This benchmark indexes HTTP access logs generated based sample logs from the elastic.co website using the generator available in https://github.com/elastic/rally-eventdata-track 20,000,000 756.0 MB 15.3 GB append-no-conflicts append-no-conflicts,transform

geonames POIs from Geonames 11,396,503 252.9 MB 3.3 GB append-no-conflicts append-no-conflicts,append-no-conflicts-index-only,append-sorted-no-conflicts,append-fast-with-conflicts,significant-text

geopoint Point coordinates from PlanetOSM 60,844,404 482.1 MB 2.3 GB append-no-conflicts append-no-conflicts,append-no-conflicts-index-only,append-fast-with-conflicts

geopointshape Point coordinates from PlanetOSM indexed as geoshapes 60,844,404 470.8 MB 2.6 GB append-no-conflicts append-no-conflicts,append-no-conflicts-index-only,append-fast-with-conflicts

geoshape Shapes from PlanetOSM 60,523,283 13.4 GB 45.4 GB append-no-conflicts append-no-conflicts

http_logs HTTP server log data 247,249,096 1.2 GB 31.1 GB append-no-conflicts append-no-conflicts,runtime-fields,append-no-conflicts-index-only,append-sorted-no-conflicts,append-index-only-with-ingest-pipeline,update,append-no-conflicts-index-reindex-only

metricbeat Metricbeat data 1,079,600 87.7 MB 1.2 GB append-no-conflicts append-no-conflicts

nested StackOverflow Q&A stored as nested docs 11,203,029 663.3 MB 3.4 GB nested-search-challenge nested-search-challenge,index-only

noaa Global daily weather measurements from NOAA 33,659,481 949.4 MB 9.0 GB append-no-conflicts append-no-conflicts,append-no-conflicts-index-only,top_metrics,aggs

nyc_taxis Taxi rides in New York in 2015 165,346,692 4.5 GB 74.3 GB append-no-conflicts append-no-conflicts,append-no-conflicts-index-only,append-sorted-no-conflicts-index-only,update,append-ml,date-histogram,indexing-querying

percolator Percolator benchmark based on AOL queries 2,000,000 121.1 kB 104.9 MB append-no-conflicts append-no-conflicts

pmc Full text benchmark with academic papers from PMC 574,199 5.5 GB 21.7 GB append-no-conflicts append-no-conflicts,append-no-conflicts-index-only,append-sorted-no-conflicts,append-fast-with-conflicts,indexing-querying

so Indexing benchmark using up to questions and answers from StackOverflow 36,062,278 8.9 GB 33.1 GB append-no-conflicts append-no-conflicts

The first two columns show the name and a description of each track. A track also specifies one or more challenges which describe the workload to run.

Starting a Race¶

Note

Do not run Rally as root as Elasticsearch will refuse to start with root privileges.

To start a race you have to define the track and challenge to run. For example:

esrally race --distribution-version=6.0.0 --track=geopoint --challenge=append-fast-with-conflicts

Rally will then start racing on this track. If you have never started Rally before, it should look similar to the following output:

$ esrally race --distribution-version=6.0.0 --track=geopoint --challenge=append-fast-with-conflicts

____ ____

/ __ \____ _/ / /_ __

/ /_/ / __ `/ / / / / /

/ _, _/ /_/ / / / /_/ /

/_/ |_|\__,_/_/_/\__, /

/____/

[INFO] Racing on track [geopoint], challenge [append-fast-with-conflicts] and car ['defaults'] with version [6.0.0].

[INFO] Downloading Elasticsearch 6.0.0 ... [OK]

[INFO] Rally will delete the benchmark candidate after the benchmark

[INFO] Downloading data from [http://benchmarks.elasticsearch.org.s3.amazonaws.com/corpora/geopoint/documents.json.bz2] (482 MB) to [/Users/dm/.rally/benchmarks/data/geopoint/documents.json.bz2] ... [OK]

[INFO] Decompressing track data from [/Users/dm/.rally/benchmarks/data/geopoint/documents.json.bz2] to [/Users/dm/.rally/benchmarks/data/geopoint/documents.json] (resulting size: 2.28 GB) ... [OK]

[INFO] Preparing file offset table for [/Users/dm/.rally/benchmarks/data/geopoint/documents.json] ... [OK]

Running index-update [ 0% done]

The benchmark will take a while to run, so be patient.

When the race has finished, Rally will show a summary on the command line:

| Metric | Task | Value | Unit |

|--------------------------------:|-------------:|----------:|-------:|

| Total indexing time | | 124.712 | min |

| Total merge time | | 21.8604 | min |

| Total refresh time | | 4.49527 | min |

| Total merge throttle time | | 0.120433 | min |

| Median CPU usage | | 546.5 | % |

| Total Young Gen GC time | | 72.078 | s |

| Total Young Gen GC count | | 43 | |

| Total Old Gen GC time | | 3.426 | s |

| Total Old Gen GC count | | 1 | |

| Index size | | 2.26661 | GB |

| Total written | | 30.083 | GB |

| Heap used for segments | | 10.7148 | MB |

| Heap used for doc values | | 0.0135536 | MB |

| Heap used for terms | | 9.22965 | MB |

| Heap used for points | | 0.78789 | MB |

| Heap used for stored fields | | 0.683708 | MB |

| Segment count | | 115 | |

| Min Throughput | index-update | 59210.4 | docs/s |

| Mean Throughput | index-update | 60110.3 | docs/s |

| Median Throughput | index-update | 65276.2 | docs/s |

| Max Throughput | index-update | 76516.6 | docs/s |

| 50.0th percentile latency | index-update | 556.269 | ms |

| 90.0th percentile latency | index-update | 852.779 | ms |

| 99.0th percentile latency | index-update | 1854.31 | ms |

| 99.9th percentile latency | index-update | 2972.96 | ms |

| 99.99th percentile latency | index-update | 4106.91 | ms |

| 100th percentile latency | index-update | 4542.84 | ms |

| 50.0th percentile service time | index-update | 556.269 | ms |

| 90.0th percentile service time | index-update | 852.779 | ms |

| 99.0th percentile service time | index-update | 1854.31 | ms |

| 99.9th percentile service time | index-update | 2972.96 | ms |

| 99.99th percentile service time | index-update | 4106.91 | ms |

| 100th percentile service time | index-update | 4542.84 | ms |

| Min Throughput | force-merge | 0.221067 | ops/s |

| Mean Throughput | force-merge | 0.221067 | ops/s |

| Median Throughput | force-merge | 0.221067 | ops/s |

| Max Throughput | force-merge | 0.221067 | ops/s |

| 100th percentile latency | force-merge | 4523.52 | ms |

| 100th percentile service time | force-merge | 4523.52 | ms |

----------------------------------

[INFO] SUCCESS (took 1624 seconds)

----------------------------------

Note

You can save this report also to a file by using --report-file=/path/to/your/report.md and save it as CSV with --report-format=csv.

What did Rally just do?

- It downloaded and started Elasticsearch 6.0.0

- It downloaded the relevant data for the geopoint track

- It ran the actual benchmark

- And finally it reported the results

If you are curious about the operations that Rally has run, inspect the geopoint track specification or start to write your own tracks. You can also configure Rally to store all data samples in Elasticsearch so you can analyze the results with Kibana. Finally, you may want to change the Elasticsearch configuration.

Compare Results: Tournaments¶

Suppose, we want to analyze the impact of a performance improvement.

First, we need a baseline measurement. For example:

esrally race --track=pmc --revision=latest --user-tag="intention:baseline_github_1234"

Above we run the baseline measurement based on the latest source code revision of Elasticsearch. We can use the command line parameter --user-tag to provide a key-value pair to document the intent of a race.

Then we implement our changes and finally we want to run another benchmark to see the performance impact of the change. In that case, we do not want Rally to change our source tree and thus specify the pseudo-revision current:

esrally race --track=pmc --revision=current --user-tag="intention:reduce_alloc_1234"

After we’ve run both races, we want to know about the performance impact. With Rally we can analyze differences of two given races easily. First of all, we need to find two races to compare by issuing esrally list races:

$ esrally list races

____ ____

/ __ \____ _/ / /_ __

/ /_/ / __ `/ / / / / /

/ _, _/ /_/ / / / /_/ /

/_/ |_|\__,_/_/_/\__, /

/____/

Recent races:

Race ID Race Timestamp Track Track Parameters Challenge Car User Tags

------------------------------------ ---------------- ------- ------------------ ------------------ -------- ------------------------------

beb154e4-0a05-4f45-ad9f-e34f9a9e51f7 20160518T122341Z pmc append-no-conflicts defaults intention:reduce_alloc_1234

0bfd4542-3821-4c79-81a2-0858636068ce 20160518T112057Z pmc append-no-conflicts defaults intention:baseline_github_1234

0cfb3576-3025-4c17-b672-d6c9e811b93e 20160518T101957Z pmc append-no-conflicts defaults

We can see that the user tag helps us to recognize races. We want to compare the two most recent races and have to provide the two race IDs in the next step:

$ esrally compare --baseline=0bfd4542-3821-4c79-81a2-0858636068ce --contender=beb154e4-0a05-4f45-ad9f-e34f9a9e51f7

____ ____

/ __ \____ _/ / /_ __

/ /_/ / __ `/ / / / / /

/ _, _/ /_/ / / / /_/ /

/_/ |_|\__,_/_/_/\__, /

/____/

Comparing baseline

Race ID: 0bfd4542-3821-4c79-81a2-0858636068ce

Race timestamp: 2016-05-18 11:20:57

Challenge: append-no-conflicts

Car: defaults

with contender

Race ID: beb154e4-0a05-4f45-ad9f-e34f9a9e51f7

Race timestamp: 2016-05-18 12:23:41

Challenge: append-no-conflicts

Car: defaults

------------------------------------------------------

_______ __ _____

/ ____(_)___ ____ _/ / / ___/_________ ________

/ /_ / / __ \/ __ `/ / \__ \/ ___/ __ \/ ___/ _ \

/ __/ / / / / / /_/ / / ___/ / /__/ /_/ / / / __/

/_/ /_/_/ /_/\__,_/_/ /____/\___/\____/_/ \___/

------------------------------------------------------

Metric Baseline Contender Diff

-------------------------------------------------------- ---------- ----------- -----------------

Min Indexing Throughput [docs/s] 19501 19118 -383.00000

Median Indexing Throughput [docs/s] 20232 19927.5 -304.45833

Max Indexing Throughput [docs/s] 21172 20849 -323.00000

Total indexing time [min] 55.7989 56.335 +0.53603

Total merge time [min] 12.9766 13.3115 +0.33495

Total refresh time [min] 5.20067 5.20097 +0.00030

Total flush time [min] 0.0648667 0.0681833 +0.00332

Total merge throttle time [min] 0.796417 0.879267 +0.08285

Query latency term (50.0 percentile) [ms] 2.10049 2.15421 +0.05372

Query latency term (90.0 percentile) [ms] 2.77537 2.84168 +0.06630

Query latency term (100.0 percentile) [ms] 4.52081 5.15368 +0.63287

Query latency country_agg (50.0 percentile) [ms] 112.049 110.385 -1.66392

Query latency country_agg (90.0 percentile) [ms] 128.426 124.005 -4.42138

Query latency country_agg (100.0 percentile) [ms] 155.989 133.797 -22.19185

Query latency scroll (50.0 percentile) [ms] 16.1226 14.4974 -1.62519

Query latency scroll (90.0 percentile) [ms] 17.2383 15.4079 -1.83043

Query latency scroll (100.0 percentile) [ms] 18.8419 18.4241 -0.41784

Query latency country_agg_cached (50.0 percentile) [ms] 1.70223 1.64502 -0.05721

Query latency country_agg_cached (90.0 percentile) [ms] 2.34819 2.04318 -0.30500

Query latency country_agg_cached (100.0 percentile) [ms] 3.42547 2.86814 -0.55732

Query latency default (50.0 percentile) [ms] 5.89058 5.83409 -0.05648

Query latency default (90.0 percentile) [ms] 6.71282 6.64662 -0.06620

Query latency default (100.0 percentile) [ms] 7.65307 7.3701 -0.28297

Query latency phrase (50.0 percentile) [ms] 1.82687 1.83193 +0.00506

Query latency phrase (90.0 percentile) [ms] 2.63714 2.46286 -0.17428

Query latency phrase (100.0 percentile) [ms] 5.39892 4.22367 -1.17525

Median CPU usage (index) [%] 668.025 679.15 +11.12499

Median CPU usage (stats) [%] 143.75 162.4 +18.64999

Median CPU usage (search) [%] 223.1 229.2 +6.10000

Total Young Gen GC time [s] 39.447 40.456 +1.00900

Total Young Gen GC count 10 11 +1.00000

Total Old Gen GC time [s] 7.108 7.703 +0.59500

Total Old Gen GC count 10 11 +1.00000

Index size [GB] 3.25475 3.25098 -0.00377

Total written [GB] 17.8434 18.3143 +0.47083

Heap used for segments [MB] 21.7504 21.5901 -0.16037

Heap used for doc values [MB] 0.16436 0.13905 -0.02531

Heap used for terms [MB] 20.0293 19.9159 -0.11345

Heap used for norms [MB] 0.105469 0.0935669 -0.01190

Heap used for points [MB] 0.773487 0.772155 -0.00133

Heap used for points [MB] 0.677795 0.669426 -0.00837

Segment count 136 121 -15.00000

Indices Stats(90.0 percentile) [ms] 3.16053 3.21023 +0.04969

Indices Stats(99.0 percentile) [ms] 5.29526 3.94132 -1.35393

Indices Stats(100.0 percentile) [ms] 5.64971 7.02374 +1.37403

Nodes Stats(90.0 percentile) [ms] 3.19611 3.15251 -0.04360

Nodes Stats(99.0 percentile) [ms] 4.44111 4.87003 +0.42892

Nodes Stats(100.0 percentile) [ms] 5.22527 5.66977 +0.44450

Setting up a Cluster¶

In this section we cover how to setup an Elasticsearch cluster with Rally. It is by no means required to use Rally for this and you can also use existing tooling like Ansible to achieve the same goal. The main difference between standard tools and Rally is that Rally is capable of setting up a wide range of Elasticsearch versions.

Warning

The following functionality is experimental. Expect the functionality and the command line interface to change significantly even in patch releases.

Overview¶

You can use the following subcommands in Rally to manage Elasticsearch nodes:

installto install a single Elasticsearch nodestartto start a previously installed Elasticsearch nodestopto stop a running Elasticsearch node and remove the installation

Each command needs to be executed locally on the machine where the Elasticsearch node should run. To setup more complex clusters remotely, we recommend using a tool like Ansible to connect to remote machines and issue these commands via Rally.

Getting Started: Benchmarking a Single Node¶

In this section we will setup a single Elasticsearch node locally, run a benchmark and then cleanup.

First we need to install Elasticearch:

esrally install --quiet --distribution-version=7.4.2 --node-name="rally-node-0" --network-host="127.0.0.1" --http-port=39200 --master-nodes="rally-node-0" --seed-hosts="127.0.0.1:39300"

The parameter --network-host defines the network interface this node will bind to and --http-port defines which port will be exposed for HTTP traffic. Rally will automatically choose the transport port range as 100 above (39300). The parameters --master-nodes and --seed-hosts are necessary for the discovery process. Please see the respective Elasticsearch documentation on discovery for more details.

This produces the following output (the value will vary for each invocation):

{

"installation-id": "69ffcfee-6378-4090-9e93-87c9f8ee59a7"

}

We will need the installation id in the next steps to refer to our current installation:

export INSTALLATION_ID=69ffcfee-6378-4090-9e93-87c9f8ee59a7

Note

You can extract the installation id value with jq with the following command: jq --raw-output '.["installation-id"]'.

After installation, we can start the node. To tie all metrics of a benchmark together, Rally needs a consistent race id across all invocations. The format of the race id does not matter but we suggest using UUIDs. You can generate a UUID on the command line with uuidgen. Issue the following command to start the node:

# generate a unique race id (use the same id from now on)

export RACE_ID=$(uuidgen)

esrally start --installation-id="${INSTALLATION_ID}" --race-id="${RACE_ID}"

After the Elasticsearch node has started, we can run a benchmark. Be sure to pass the same race id so you can match results later in your metrics store:

esrally race --pipeline=benchmark-only --target-host=127.0.0.1:39200 --track=geonames --challenge=append-no-conflicts-index-only --on-error=abort --race-id=${RACE_ID}

When the benchmark has finished, we can stop the node again:

esrally stop --installation-id="${INSTALLATION_ID}"

If you only want to shutdown the node but don’t want to delete the node and the data, pass --preserve-install additionally.

Levelling Up: Benchmarking a Cluster¶

This approach of being able to manage individual cluster nodes shows its power when we want to setup a cluster consisting of multiple nodes. At the moment Rally only supports a uniform cluster architecture but with this approach we can also setup arbitrarily complex clusters. The following examples shows how to setup a uniform three node cluster on three machines with the IPs 192.168.14.77, 192.168.14.78 and 192.168.14.79. On each machine we will issue the following command (pick the right one per machine):

# on 192.168.14.77

export INSTALLATION_ID=$(esrally install --quiet --distribution-version=7.4.2 --node-name="rally-node-0" --network-host="192.168.14.77" --http-port=39200 --master-nodes="rally-node-0,rally-node-1,rally-node-2" --seed-hosts="192.168.14.77:39300,192.168.14.78:39300,192.168.14.79:39300" | jq --raw-output '.["installation-id"]')

# on 192.168.14.78

export INSTALLATION_ID=$(esrally install --quiet --distribution-version=7.4.2 --node-name="rally-node-1" --network-host="192.168.14.78" --http-port=39200 --master-nodes="rally-node-0,rally-node-1,rally-node-2" --seed-hosts="192.168.14.77:39300,192.168.14.78:39300,192.168.14.79:39300" | jq --raw-output '.["installation-id"]')

# on 192.168.14.79

export INSTALLATION_ID=$(esrally install --quiet --distribution-version=7.4.2 --node-name="rally-node-2" --network-host="192.168.14.79" --http-port=39200 --master-nodes="rally-node-0,rally-node-1,rally-node-2" --seed-hosts="192.168.14.77:39300,192.168.14.78:39300,192.168.14.79:39300" | jq --raw-output '.["installation-id"]')

Then we pick a random race id, e.g. fb38013d-5d06-4b81-b81a-b61c8c10f6e5 and set it on each machine (including the machine where will generate load):

export RACE_ID="fb38013d-5d06-4b81-b81a-b61c8c10f6e5"

Now we can start the cluster. Run the following command on each node:

esrally start --installation-id="${INSTALLATION_ID}" --race-id="${RACE_ID}"

Once this has finished, we can check that the cluster is up and running e.g. with the _cat/health API:

curl http://192.168.14.77:39200/_cat/health\?v

We should see that our cluster consisting of three nodes is up and running:

epoch timestamp cluster status node.total node.data shards pri relo init unassign pending_tasks max_task_wait_time active_shards_percent

1574930657 08:44:17 rally-benchmark green 3 3 0 0 0 0 0 0 - 100.0%

Now we can start the benchmark on the load generator machine (remember to set the race id there):

esrally race --pipeline=benchmark-only --target-host=192.168.14.77:39200,192.168.14.78:39200,192.168.14.79:39200 --track=geonames --challenge=append-no-conflicts-index-only --on-error=abort --race-id=${RACE_ID}

Similarly to the single-node benchmark, we can now shutdown the cluster again by issuing the following command on each node:

esrally stop --installation-id="${INSTALLATION_ID}"

Tips and Tricks¶

This section covers various tips and tricks in a recipe-style fashion.

Benchmarking an Elastic Cloud cluster¶

Note

We assume in this recipe, that Rally is already properly configured.

Benchmarking an Elastic Cloud cluster with Rally is similar to benchmarking any other existing cluster. In the following example we will run a benchmark against a cluster reachable via the endpoint https://abcdef123456.europe-west1.gcp.cloud.es.io:9243 by the user elastic with the password changeme:

esrally race --track=pmc --target-hosts=abcdef123456.europe-west1.gcp.cloud.es.io:9243 --pipeline=benchmark-only --client-options="use_ssl:true,verify_certs:true,basic_auth_user:'elastic',basic_auth_password:'changeme'"

Benchmarking an existing cluster¶

Warning

If you are just getting started with Rally and don’t understand how it works, do NOT run it against any production or production-like cluster. Besides, benchmarks should be executed in a dedicated environment anyway where no additional traffic skews results.

Note

We assume in this recipe, that Rally is already properly configured.

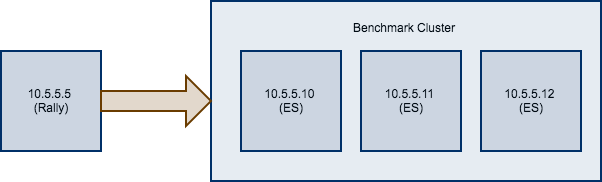

Consider the following configuration: You have an existing benchmarking cluster, that consists of three Elasticsearch nodes running on 10.5.5.10, 10.5.5.11, 10.5.5.12. You’ve setup the cluster yourself and want to benchmark it with Rally. Rally is installed on 10.5.5.5.

First of all, we need to decide on a track. So, we run esrally list tracks:

Name Description Documents Compressed Size Uncompressed Size Default Challenge All Challenges

---------- ------------------------------------------------- ----------- ----------------- ------------------- ----------------------- ---------------------------

geonames POIs from Geonames 11396505 252.4 MB 3.3 GB append-no-conflicts append-no-conflicts,appe...

geopoint Point coordinates from PlanetOSM 60844404 481.9 MB 2.3 GB append-no-conflicts append-no-conflicts,appe...

http_logs HTTP server log data 247249096 1.2 GB 31.1 GB append-no-conflicts append-no-conflicts,appe...

nested StackOverflow Q&A stored as nested docs 11203029 663.1 MB 3.4 GB nested-search-challenge nested-search-challenge,...

noaa Global daily weather measurements from NOAA 33659481 947.3 MB 9.0 GB append-no-conflicts append-no-conflicts,appe...

nyc_taxis Taxi rides in New York in 2015 165346692 4.5 GB 74.3 GB append-no-conflicts append-no-conflicts,appe...

percolator Percolator benchmark based on AOL queries 2000000 102.7 kB 104.9 MB append-no-conflicts append-no-conflicts,appe...

pmc Full text benchmark with academic papers from PMC 574199 5.5 GB 21.7 GB append-no-conflicts append-no-conflicts,appe...

We’re interested in a full text benchmark, so we’ll choose to run pmc. If you have your own data that you want to use for benchmarks create your own track instead; the metrics you’ll gather will be more representative and useful than some default track.

Next, we need to know which machines to target which is easy as we can see that from the diagram above.

Finally we need to check which pipeline to use. For this case, the benchmark-only pipeline is suitable as we don’t want Rally to provision the cluster for us.

Now we can invoke Rally:

esrally race --track=pmc --target-hosts=10.5.5.10:9200,10.5.5.11:9200,10.5.5.12:9200 --pipeline=benchmark-only

If you have X-Pack Security enabled, then you’ll also need to specify another parameter to use https and to pass credentials:

esrally race --track=pmc --target-hosts=10.5.5.10:9243,10.5.5.11:9243,10.5.5.12:9243 --pipeline=benchmark-only --client-options="use_ssl:true,verify_certs:true,basic_auth_user:'elastic',basic_auth_password:'changeme'"

Benchmarking a remote cluster¶

Contrary to the previous recipe, you want Rally to provision all cluster nodes.

We will use the following configuration for the example:

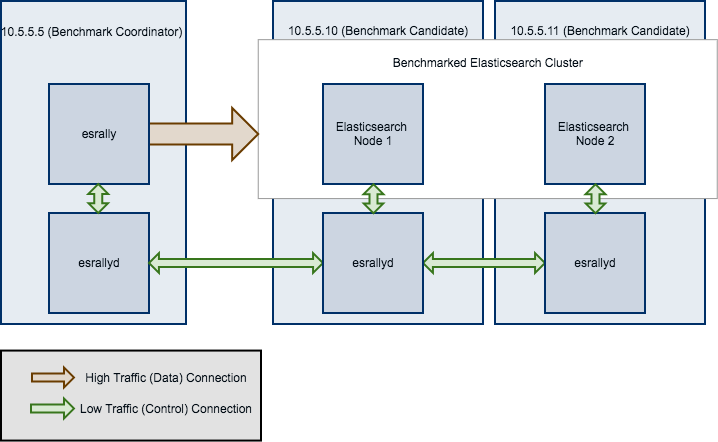

- You will start Rally on

10.5.5.5. We will call this machine the “benchmark coordinator”. - Your Elasticsearch cluster will consist of two nodes which run on

10.5.5.10and10.5.5.11. We will call these machines the “benchmark candidate”s.

Note

All esrallyd nodes form a cluster that communicates via the “benchmark coordinator”. For aesthetic reasons we do not show a direct connection between the “benchmark coordinator” and all nodes.

To run a benchmark for this scenario follow these steps:

- Install and configure Rally on all machines. Be sure that the same version is installed on all of them and fully configured.

- Start the Rally daemon on each machine. The Rally daemon allows Rally to communicate with all remote machines. On the benchmark coordinator run

esrallyd start --node-ip=10.5.5.5 --coordinator-ip=10.5.5.5and on the benchmark candidate machines runesrallyd start --node-ip=10.5.5.10 --coordinator-ip=10.5.5.5andesrallyd start --node-ip=10.5.5.11 --coordinator-ip=10.5.5.5respectively. The--node-ipparameter tells Rally the IP of the machine on which it is running. As some machines have more than one network interface, Rally will not attempt to auto-detect the machine IP. The--coordinator-ipparameter tells Rally the IP of the benchmark coordinator node. - Start the benchmark by invoking Rally as usual on the benchmark coordinator, for example:

esrally race --track=pmc --distribution-version=7.0.0 --target-hosts=10.5.5.10:39200,10.5.5.11:39200. Rally will derive from the--target-hostsparameter that it should provision the nodes10.5.5.10and10.5.5.11. - After the benchmark has finished you can stop the Rally daemon again. On the benchmark coordinator and on the benchmark candidates run

esrallyd stop.

Note

Logs are managed per machine, so all relevant log files and also telemetry output is stored on the benchmark candidates but not on the benchmark coordinator.

Now you might ask yourself what the differences to benchmarks of existing clusters are. In general you should aim to give Rally as much control as possible as benchmark are easier reproducible and you get more metrics. The following table provides some guidance on when to choose which option:

| Your requirement | Recommendation |

|---|---|

| You want to use Rally’s telemetry devices | Use Rally daemon, as it can provision the remote node for you |

| You want to benchmark a source build of Elasticsearch | Use Rally daemon, as it can build Elasticsearch for you |

| You want to tweak the cluster configuration yourself | Use Rally daemon with a custom configuration or set up the cluster by yourself and use --pipeline=benchmark-only |

| You need to run a benchmark with plugins | Use Rally daemon if the plugins are supported or set up the cluster by yourself and use --pipeline=benchmark-only |

| You need to run a benchmark against multiple nodes | Use Rally daemon if all nodes can be configured identically. For more complex cases, set up the cluster by yourself and use --pipeline=benchmark-only |

Rally daemon will be able to cover most of the cases described above in the future so there should be almost no case where you need to use the benchmark-only pipeline.

Distributing the load test driver¶

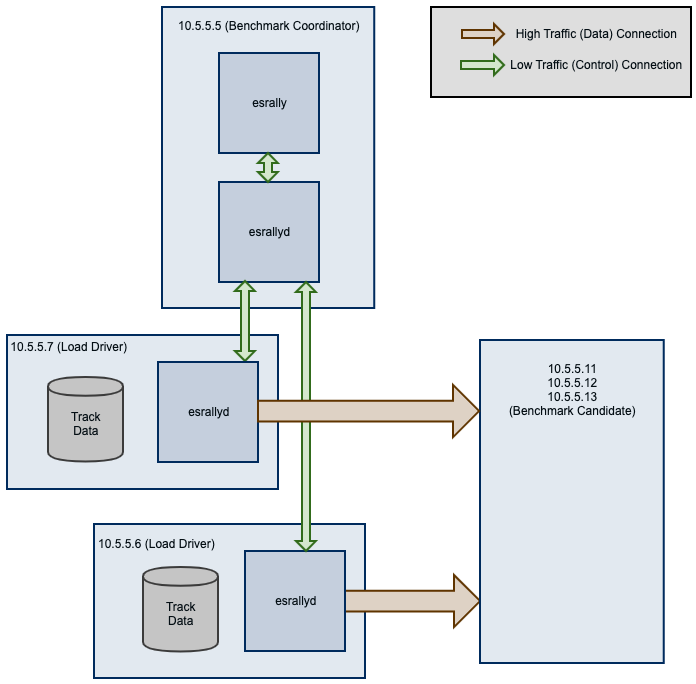

By default, Rally will generate load on the same machine where you start a benchmark. However, when you are benchmarking larger clusters, a single load test driver machine may not be able to generate sufficient load. In these cases, you should use multiple load driver machines. We will use the following configuration for the example:

- You will start Rally on

10.5.5.5. We will call this machine the “benchmark coordinator”. - You will start two load drivers on

10.5.5.6and10.5.5.7. Note that one load driver will simulate multiple clients. Rally will simply assign clients to load driver machines in a round-robin fashion. - Your Elasticsearch cluster will consist of three nodes which run on

10.5.5.11,10.5.5.12and10.5.5.13. We will call these machines the “benchmark candidate”. For simplicity, we will assume an externally provisioned cluster but you can also use Rally to setup the cluster for you (see above).

- Install and configure Rally on all machines. Be sure that the same version is installed on all of them and fully configured.

- Start the Rally daemon on each machine. The Rally daemon allows Rally to communicate with all remote machines. On the benchmark coordinator run

esrallyd start --node-ip=10.5.5.5 --coordinator-ip=10.5.5.5and on the load driver machines runesrallyd start --node-ip=10.5.5.6 --coordinator-ip=10.5.5.5andesrallyd start --node-ip=10.5.5.7 --coordinator-ip=10.5.5.5respectively. The--node-ipparameter tells Rally the IP of the machine on which it is running. As some machines have more than one network interface, Rally will not attempt to auto-detect the machine IP. The--coordinator-ipparameter tells Rally the IP of the benchmark coordinator node. - Start the benchmark by invoking Rally on the benchmark coordinator, for example:

esrally race --track=pmc --pipeline=benchmark-only --load-driver-hosts=10.5.5.6,10.5.5.7 --target-hosts=10.5.5.11:9200,10.5.5.12:9200,10.5.5.13:9200. - After the benchmark has finished you can stop the Rally daemon again. On the benchmark coordinator and on the load driver machines run

esrallyd stop.

Note

Rally neither distributes code (i.e. custom runners or parameter sources) nor data automatically. You should place all tracks and their data on all machines in the same directory before starting the benchmark. Alternatively, you can store your track in a custom track repository.

Note

As indicated in the diagram, track data will be downloaded by each load driver machine separately. If you want to avoid that, you can run a benchmark once without distributing the load test driver (i.e. do not specify --load-driver-hosts) and then copy the contents of ~/.rally/benchmarks/data to all load driver machines.

Changing the default track repository¶

Rally supports multiple track repositories. This allows you for example to have a separate company-internal repository for your own tracks that is separate from Rally’s default track repository. However, you always need to define --track-repository=my-custom-repository which can be cumbersome. If you want to avoid that and want Rally to use your own track repository by default you can just replace the default track repository definition in ~./rally/rally.ini. Consider this example:

...

[tracks]

default.url = git@github.com:elastic/rally-tracks.git

teamtrackrepo.url = git@example.org/myteam/my-tracks.git

If teamtrackrepo should be the default track repository, just define it as default.url. E.g.:

...

[tracks]

default.url = git@example.org/myteam/my-tracks.git

old-rally-default.url=git@github.com:elastic/rally-tracks.git

Also don’t forget to rename the folder of your local working copy as Rally will search for a track repository with the name default:

cd ~/.rally/benchmarks/tracks/

mv default old-rally-default

mv teamtrackrepo default

From now on, Rally will treat your repository as default and you need to run Rally with --track-repository=old-rally-default if you want to use the out-of-the-box Rally tracks.

Testing Rally against CCR clusters using a remote metric store¶

Testing Rally features (such as the ccr-stats telemetry device) requiring Elasticsearch clusters configured for cross-cluster replication can be a time consuming process. Use recipes/ccr in Rally’s repository to test a simple but complete example.

Running the start.sh script requires Docker locally installed and performs the following actions:

- Starts a single node (512MB heap) Elasticsearch cluster locally, to serve as a metrics store. It also starts Kibana attached to the Elasticsearch metric store cluster.

- Creates a new configuration file for Rally under

~/.rally/rally-metricstore.inireferencing Elasticsearch from step 1. - Starts two additional local Elasticsearch clusters with 1 node each, (version

7.3.2by default) calledleaderandfollowerlistening at ports 32901 and 32902 respectively. Each node uses 1GB heap. - Accepts the trial license.

- Configures

leaderon thefolloweras a remote cluster. - Sets an auto-follow pattern on the follower for every index on the leader to be replicated as

<leader-index-name>-copy. - Runs the geonames track, append-no-conflicts-index-only challenge challenge, ingesting only 20% of the corpus using 3 primary shards. It also enables the

ccr-statstelemetry device with a sample rate interval of1s.

Rally will push metrics to the metric store configured in 1. and they can be visualized by accessing Kibana at http://locahost:5601.

To tear down everything issue ./stop.sh.

It is possible to specify a different version of Elasticsearch for step 3. by setting export ES_VERSION=<the_desired_version>.

Identifying when errors have been encountered¶

Custom track development can be error prone especially if you are testing a new query. A number of reasons can lead to queries returning errors.

Consider a simple example Rally operation:

{

"name": "geo_distance",

"operation-type": "search",

"index": "logs-*",

"body": {

"query": {

"geo_distance": {

"distance": "12km",

"source.geo.location": "40,-70"

}

}

}

}

This query requires the field source.geo.location to be mapped as a geo_point type. If incorrectly mapped, Elasticsearch will respond with an error.

Rally will not exit on errors (unless fatal e.g. ECONNREFUSED) by default, instead reporting errors in the summary report via the Error Rate statistic. This can potentially leading to misleading results. This behavior is by design and consistent with other load testing tools such as JMeter i.e. In most cases it is desirable that a large long running benchmark should not fail because of a single error response.

This behavior can also be changed, by invoking Rally with the –on-error switch e.g.:

esrally race --track=geonames --on-error=abort

Errors can also be investigated if you have configured a dedicated Elasticsearch metrics store.

Checking Queries and Responses¶

As described above, errors can lead to misleading benchmarking results. Some issues, however, are more subtle and the result of queries not behaving and matching as intended.

Consider the following simple Rally operation:

{

"name": "geo_distance",

"operation-type": "search",

"detailed-results": true,

"index": "logs-*",

"body": {

"query": {

"term": {

"http.request.method": {

"value": "GET"

}

}

}

}

}

For this term query to match the field http.request.method needs to be type keyword. Should this field be dynamically mapped, its default type will be text causing the value GET to be analyzed, and indexed as get. The above query will in turn return 0 hits. The field should either be correctly mapped or the query modified to match on http.request.method.keyword.

Issues such as this can lead to misleading benchmarking results. Prior to running any benchmarks for analysis, we therefore recommended users ascertain whether queries are behaving as intended. Rally provides several tools to assist with this.

Firstly, users can set the log level for the Elasticsearch client to DEBUG i.e.:

"loggers": {

"elasticsearch": {

"handlers": ["rally_log_handler"],

"level": "DEBUG",

"propagate": false

},

"rally.profile": {

"handlers": ["rally_profile_handler"],

"level": "INFO",

"propagate": false

}

}

This will in turn ensure logs include the Elasticsearch query and accompanying response e.g.:

2019-12-16 14:56:08,389 -not-actor-/PID:9790 elasticsearch DEBUG > {"sort":[{"geonameid":"asc"}],"query":{"match_all":{}}}

2019-12-16 14:56:08,389 -not-actor-/PID:9790 elasticsearch DEBUG < {"took":1,"timed_out":false,"_shards":{"total":5,"successful":5,"skipped":0,"failed":0},"hits":{"total":{"value":1000,"relation":"eq"},"max_score":null,"hits":[{"_index":"geonames","_type":"_doc","_id":"Lb81D28Bu7VEEZ3mXFGw","_score":null,"_source":{"geonameid": 2986043, "name": "Pic de Font Blanca", "asciiname": "Pic de Font Blanca", "alternatenames": "Pic de Font Blanca,Pic du Port", "feature_class": "T", "feature_code": "PK", "country_code": "AD", "admin1_code": "00", "population": 0, "dem": "2860", "timezone": "Europe/Andorra", "location": [1.53335, 42.64991]},"sort":[2986043]},

Users should discard any performance metrics collected from a benchmark with DEBUG logging. This will likely cause a client-side bottleneck so once the correctness of the queries has been established, disable this setting and re-run any benchmarks.

The number of hits from queries can also be investigated if you have configured a dedicated Elasticsearch metrics store. Specifically, documents within the index pattern rally-metrics-* contain a meta field with a summary of individual responses e.g.:

{

"@timestamp" : 1597681313435,

"relative-time" : 130273.374,

"race-id" : "452ad9d7-9c21-4828-848e-89974af3230e",

"race-timestamp" : "20200817T160412Z",

"environment" : "Personal",

"track" : "geonames",

"challenge" : "append-no-conflicts",

"car" : "defaults",

"name" : "latency",

"value" : 270.77871300025436,

"unit" : "ms",

"sample-type" : "warmup",

"meta" : {

"source_revision" : "757314695644ea9a1dc2fecd26d1a43856725e65",

"distribution_version" : "7.8.0",

"distribution_flavor" : "oss",

"pages" : 25,

"hits" : 11396503,

"hits_relation" : "eq",

"timed_out" : false,

"took" : 110,

"success" : true

},

"task" : "scroll",

"operation" : "scroll",

"operation-type" : "Search"

}

Finally, it is also possible to add assertions to an operation:

{

"name": "geo_distance",

"operation-type": "search",

"detailed-results": true,

"index": "logs-*",

"assertions": [

{

"property": "hits",

"condition": ">",

"value": 0

}

],

"body": {

"query": {

"term": {

"http.request.method": {

"value": "GET"

}

}

}

}

}

When a benchmark is executed with --enable-assertions and this query returns no hits, the benchmark is aborted with a message:

[ERROR] Cannot race. Error in load generator [0]

Cannot run task [geo_distance]: Expected [hits] to be > [0] but was [0].

Define Custom Workloads: Tracks¶

Definition¶

A track describes one or more benchmarking scenarios. Its structure is described in detail in the track reference.

Creating a track from data in an existing cluster¶

If you already have a cluster with data in it you can use the create-track subcommand of Rally to create a basic Rally track. To create a Rally track with data from the indices products and companies that are hosted by a locally running Elasticsearch cluster, issue the following command:

esrally create-track --track=acme --target-hosts=127.0.0.1:9200 --indices="products,companies" --output-path=~/tracks

If you want to connect to a cluster with TLS and basic authentication enabled, for example via Elastic Cloud, also specify –client-options and change basic_auth_user and basic_auth_password accordingly:

esrally create-track --track=acme --target-hosts=abcdef123.us-central-1.gcp.cloud.es.io:9243 --client-options="use_ssl:true,verify_certs:true,basic_auth_user:'elastic',basic_auth_password:'secret-password'" --indices="products,companies" --output-path=~/tracks

The track generator will create a folder with the track’s name in the specified output directory:

> find tracks/acme

tracks/acme

tracks/acme/companies-documents.json

tracks/acme/companies-documents.json.bz2

tracks/acme/companies-documents-1k.json

tracks/acme/companies-documents-1k.json.bz2

tracks/acme/companies.json

tracks/acme/products-documents.json

tracks/acme/products-documents.json.bz2

tracks/acme/products-documents-1k.json

tracks/acme/products-documents-1k.json.bz2

tracks/acme/products.json

tracks/acme/track.json

The files are organized as follows:

track.jsoncontains the actual Rally track. For details see the track reference.companies.jsonandproducts.jsoncontain the mapping and settings for the extracted indices.*-documents.json(.bz2)contains the sources of all the documents from the extracted indices. The files suffixed with-1kcontain a smaller version of the document corpus to support test mode.

Creating a track from scratch¶

We will create the track “tutorial” step by step. We store everything in the directory ~/rally-tracks/tutorial but you can choose any other location.

First, get some data. Geonames provides geo data under a creative commons license. Download allCountries.zip (around 300MB), extract it and inspect allCountries.txt.

The file is tab-delimited but to bulk-index data with Elasticsearch we need JSON. Convert the data with the following script:

import json

cols = (("geonameid", "int", True),

("name", "string", True),

("asciiname", "string", False),

("alternatenames", "string", False),

("latitude", "double", True),

("longitude", "double", True),

("feature_class", "string", False),

("feature_code", "string", False),

("country_code", "string", True),

("cc2", "string", False),

("admin1_code", "string", False),

("admin2_code", "string", False),

("admin3_code", "string", False),

("admin4_code", "string", False),

("population", "long", True),

("elevation", "int", False),

("dem", "string", False),

("timezone", "string", False))

def main():

with open("allCountries.txt", "rt", encoding="UTF-8") as f:

for line in f:

tup = line.strip().split("\t")

record = {}

for i in range(len(cols)):

name, type, include = cols[i]

if tup[i] != "" and include:

if type in ("int", "long"):

record[name] = int(tup[i])

elif type == "double":

record[name] = float(tup[i])

elif type == "string":

record[name] = tup[i]

print(json.dumps(record, ensure_ascii=False))

if __name__ == "__main__":

main()

Store the script as toJSON.py in the tutorial directory (~/rally-tracks/tutorial). Invoke it with python3 toJSON.py > documents.json.

Then store the following mapping file as index.json in the tutorial directory:

{

"settings": {

"index.number_of_replicas": 0

},

"mappings": {

"docs": {

"dynamic": "strict",

"properties": {

"geonameid": {

"type": "long"

},

"name": {

"type": "text"

},

"latitude": {

"type": "double"

},

"longitude": {

"type": "double"

},

"country_code": {

"type": "text"

},

"population": {

"type": "long"

}

}

}

}

}

Note

This tutorial assumes that you want to benchmark a version of Elasticsearch prior to 7.0.0. If you want to benchmark Elasticsearch 7.0.0 or later you need to remove the mapping type above.

For details on the allowed syntax, see the Elasticsearch documentation on mappings and the create index API.

Finally, store the track as track.json in the tutorial directory:

{

"version": 2,

"description": "Tutorial benchmark for Rally",

"indices": [

{

"name": "geonames",

"body": "index.json",

"types": [ "docs" ]

}

],

"corpora": [

{

"name": "rally-tutorial",

"documents": [

{

"source-file": "documents.json",

"document-count": 11658903,

"uncompressed-bytes": 1544799789

}

]

}

],

"schedule": [

{

"operation": {

"operation-type": "delete-index"

}

},

{

"operation": {

"operation-type": "create-index"

}

},

{

"operation": {

"operation-type": "cluster-health",

"request-params": {

"wait_for_status": "green"

},

"retry-until-success": true

}

},

{

"operation": {

"operation-type": "bulk",

"bulk-size": 5000

},

"warmup-time-period": 120,

"clients": 8

},

{

"operation": {

"operation-type": "force-merge"

}

},

{

"operation": {

"name": "query-match-all",

"operation-type": "search",

"body": {

"query": {

"match_all": {}

}

}

},

"clients": 8,

"warmup-iterations": 1000,

"iterations": 1000,

"target-throughput": 100

}

]

}

The numbers under the documents property are needed to verify integrity and provide progress reports. Determine the correct document count with wc -l documents.json. For the size in bytes, use stat -f %z documents.json on macOS and stat -c %s documents.json on GNU/Linux.

Note

This tutorial assumes that you want to benchmark a version of Elasticsearch prior to 7.0.0. If you want to benchmark Elasticsearch 7.0.0 or later you need to remove the types property above.

Note

You can store any supporting scripts along with your track. However, you need to place them in a directory starting with “_”, e.g. “_support”. Rally loads track plugins (see below) from any directory but will ignore directories starting with “_”.

Note

We have defined a JSON schema for tracks which you can use to check how to define your track. You should also check the tracks provided by Rally for inspiration.

The new track appears when you run esrally list tracks --track-path=~/rally-tracks/tutorial:

$ esrally list tracks --track-path=~/rally-tracks/tutorial

____ ____

/ __ \____ _/ / /_ __

/ /_/ / __ `/ / / / / /

/ _, _/ /_/ / / / /_/ /

/_/ |_|\__,_/_/_/\__, /

/____/

Available tracks:

Name Description Documents Compressed Size Uncompressed Size

---------- ----------------------------- ----------- --------------- -----------------

tutorial Tutorial benchmark for Rally 11658903 N/A 1.4 GB

You can also show details about your track with esrally info --track-path=~/rally-tracks/tutorial:

$ esrally info --track-path=~/rally-tracks/tutorial

____ ____

/ __ \____ _/ / /_ __

/ /_/ / __ `/ / / / / /

/ _, _/ /_/ / / / /_/ /

/_/ |_|\__,_/_/_/\__, /

/____/

Showing details for track [tutorial]:

* Description: Tutorial benchmark for Rally

* Documents: 11,658,903

* Compressed Size: N/A

* Uncompressed Size: 1.4 GB

Schedule:

----------

1. delete-index

2. create-index

3. cluster-health

4. bulk (8 clients)

5. force-merge

6. query-match-all (8 clients)

Congratulations, you have created your first track! You can test it with esrally race --distribution-version=7.0.0 --track-path=~/rally-tracks/tutorial.

Adding support for test mode¶

You can check your track very quickly for syntax errors when you invoke Rally with --test-mode. Rally postprocesses its internal track representation as follows:

- Iteration-based tasks run at most one warmup iteration and one measurement iteration.

- Time-period-based tasks run at most for 10 seconds without warmup.

Rally also postprocesses all data file names. Instead of documents.json, Rally expects documents-1k.json and assumes the file contains 1.000 documents. You need to prepare these data files though. Pick 1.000 documents for every data file in your track and store them in a file with the suffix -1k. We choose the first 1.000 with head -n 1000 documents.json > documents-1k.json.

Challenges¶

To specify different workloads in the same track you can use so-called challenges. Instead of specifying the schedule property on top-level you specify a challenges array:

{

"version": 2,

"description": "Tutorial benchmark for Rally",

"indices": [

{

"name": "geonames",

"body": "index.json",

"types": [ "docs" ]

}

],

"corpora": [

{

"name": "rally-tutorial",

"documents": [

{

"source-file": "documents.json",

"document-count": 11658903,

"uncompressed-bytes": 1544799789

}

]

}

],

"challenges": [

{

"name": "index-and-query",

"default": true,

"schedule": [

{

"operation": {

"operation-type": "delete-index"

}

},

{

"operation": {

"operation-type": "create-index"

}

},

{

"operation": {

"operation-type": "cluster-health",

"request-params": {

"wait_for_status": "green"

},

"retry-until-success": true

}

},

{

"operation": {

"operation-type": "bulk",

"bulk-size": 5000

},

"warmup-time-period": 120,

"clients": 8

},

{

"operation": {

"operation-type": "force-merge"

}

},

{

"operation": {

"name": "query-match-all",

"operation-type": "search",

"body": {

"query": {

"match_all": {}

}

}

},

"clients": 8,

"warmup-iterations": 1000,

"iterations": 1000,

"target-throughput": 100

}

]

}

]

}

Note

If you define multiple challenges, Rally runs the challenge where default is set to true. If you want to run a different challenge, provide the command line option --challenge=YOUR_CHALLENGE_NAME.

When should you use challenges? Challenges are useful when you want to run completely different workloads based on the same track but for the majority of cases you should get away without using challenges:

- To run only a subset of the tasks, you can use task filtering, e.g.

--include-tasks="create-index,bulk"will only run these two tasks in the track above or--exclude-tasks="bulk"will run all tasks except forbulk. - To vary parameters, e.g. the number of clients, you can use track parameters

Structuring your track¶

track.json is the entry point to a track but you can split your track as you see fit. Suppose you want to add more challenges to the track but keep them in separate files. Create a challenges directory and store the following in challenges/index-and-query.json:

{

"name": "index-and-query",

"default": true,

"schedule": [

{

"operation": {

"operation-type": "delete-index"

}

},

{

"operation": {

"operation-type": "create-index"

}

},

{

"operation": {

"operation-type": "cluster-health",

"request-params": {

"wait_for_status": "green"

},

"retry-until-success": true

}

},

{

"operation": {

"operation-type": "bulk",

"bulk-size": 5000

},

"warmup-time-period": 120,

"clients": 8

},

{

"operation": {

"operation-type": "force-merge"

}

},

{

"operation": {

"name": "query-match-all",

"operation-type": "search",

"body": {

"query": {

"match_all": {}

}

}

},

"clients": 8,

"warmup-iterations": 1000,

"iterations": 1000,

"target-throughput": 100

}

]

}

Include the new file in track.json:

{

"version": 2,

"description": "Tutorial benchmark for Rally",

"indices": [

{

"name": "geonames",

"body": "index.json",

"types": [ "docs" ]

}

],

"corpora": [

{

"name": "rally-tutorial",

"documents": [

{

"source-file": "documents.json",

"document-count": 11658903,

"uncompressed-bytes": 1544799789

}

]

}

],

"challenges": [

{% include "challenges/index-and-query.json" %}

]

}

We replaced the challenge content with {% include "challenges/index-and-query.json" %} which tells Rally to include the challenge from the provided file. You can use include on arbitrary parts of your track.

To reuse operation definitions across challenges, you can define them in a separate operations block and refer to them by name in the corresponding challenge:

{

"version": 2,

"description": "Tutorial benchmark for Rally",

"indices": [

{

"name": "geonames",

"body": "index.json",

"types": [ "docs" ]

}

],

"corpora": [

{

"name": "rally-tutorial",

"documents": [

{

"source-file": "documents.json",

"document-count": 11658903,

"uncompressed-bytes": 1544799789

}

]

}

],

"operations": [

{

"name": "delete",

"operation-type": "delete-index"

},

{

"name": "create",

"operation-type": "create-index"

},

{

"name": "wait-for-green",

"operation-type": "cluster-health",

"request-params": {

"wait_for_status": "green"

},

"retry-until-success": true

},

{

"name": "bulk-index",

"operation-type": "bulk",

"bulk-size": 5000

},

{

"name": "force-merge",

"operation-type": "force-merge"

},

{

"name": "query-match-all",

"operation-type": "search",

"body": {

"query": {

"match_all": {}

}

}

}

],

"challenges": [

{% include "challenges/index-and-query.json" %}

]

}

challenges/index-and-query.json then becomes:

{

"name": "index-and-query",

"default": true,

"schedule": [

{

"operation": "delete"

},

{

"operation": "create"

},

{

"operation": "wait-for-green"

},

{

"operation": "bulk-index",

"warmup-time-period": 120,

"clients": 8

},

{

"operation": "force-merge"

},

{

"operation": "query-match-all",

"clients": 8,

"warmup-iterations": 1000,

"iterations": 1000,

"target-throughput": 100

}

]

}

Note how we reference to the operations by their name (e.g. create, bulk-index, force-merge or query-match-all).

You can also use Rally’s collect helper to simplify including multiple challenges:

{% import "rally.helpers" as rally %}

{

"version": 2,

"description": "Tutorial benchmark for Rally",

"indices": [

{

"name": "geonames",

"body": "index.json",

"types": [ "docs" ]

}

],

"corpora": [

{

"name": "rally-tutorial",

"documents": [

{

"source-file": "documents.json",

"document-count": 11658903,

"uncompressed-bytes": 1544799789

}

]

}

],

"operations": [

{

"name": "delete",

"operation-type": "delete-index"

},

{

"name": "create",

"operation-type": "create-index"

},

{

"name": "wait-for-green",

"operation-type": "cluster-health",

"request-params": {

"wait_for_status": "green"

},

"retry-until-success": true

},

{

"name": "bulk-index",

"operation-type": "bulk",

"bulk-size": 5000

},

{

"name": "force-merge",

"operation-type": "force-merge"

},

{

"name": "query-match-all",

"operation-type": "search",

"body": {

"query": {

"match_all": {}

}

}

}

],

"challenges": [

{{ rally.collect(parts="challenges/*.json") }}

]

}

The changes are:

- We import helper functions from Rally by adding

{% import "rally.helpers" as rally %}in line 1. - We use Rally’s

collecthelper to find and include all JSON files in thechallengessubdirectory with the statement{{ rally.collect(parts="challenges/*.json") }}.

Note

Rally’s log file contains the fully rendered track after it has loaded it successfully.

You can even use Jinja2 variables but then you need to import the Rally helpers a bit differently. You also need to declare all variables before the import statement:

{% set clients = 16 %}

{% import "rally.helpers" as rally with context %}

If you use this idiom you can refer to the clients variable inside your snippets with {{ clients }}.

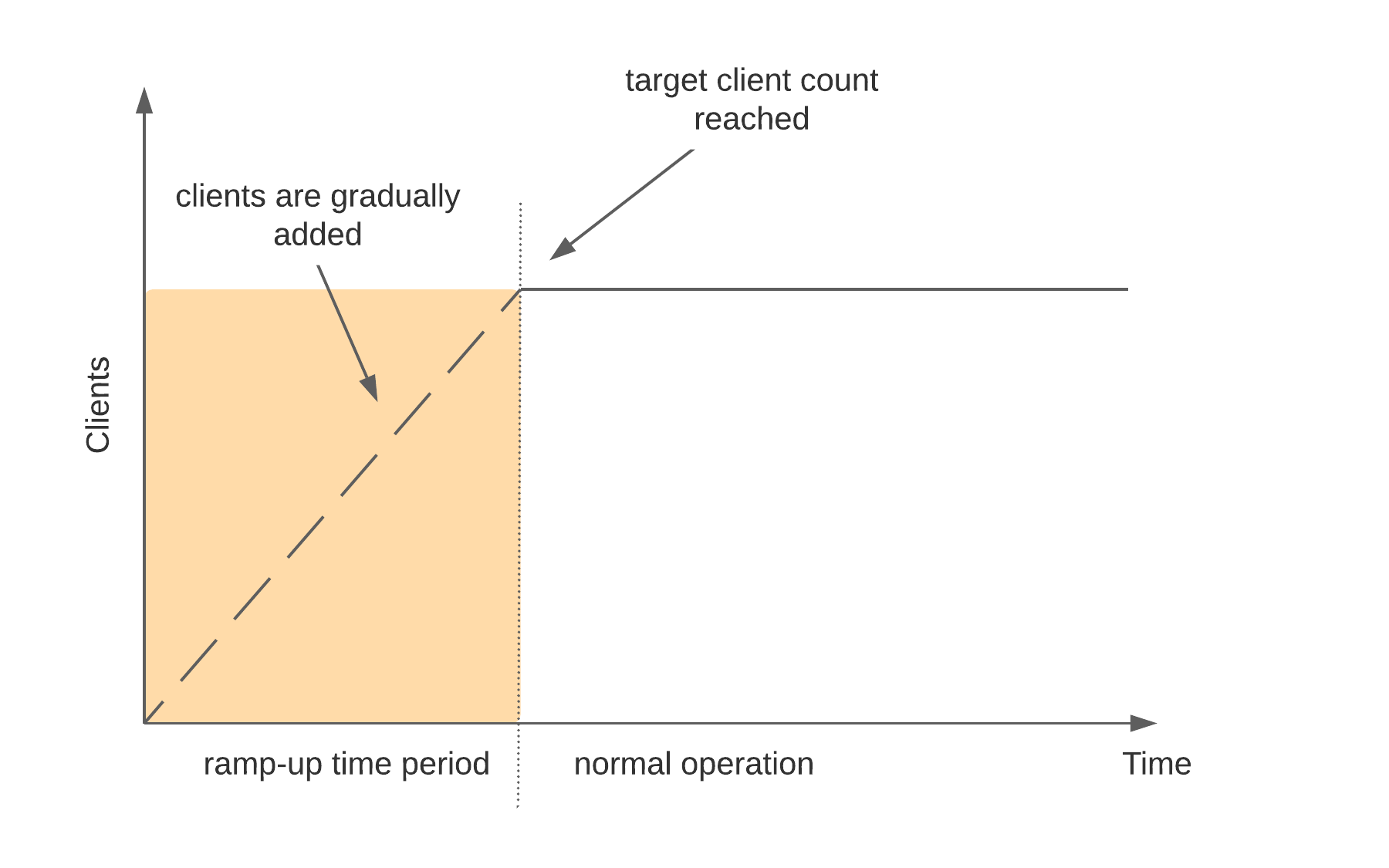

Sharing your track with others¶